Preparing for LISA with the Cosmology Data Centre

Cosmology has historically been driven by theoretical modelling, while observations of the cosmos have been limited by the available technology. Thanks to incredible advances in technology over the last decades, we are now in the era of data-driven cosmology: we have more cosmological data available than ever before, and this is only expected to increase in the coming years, with ongoing projects like the Euclid mission and the Vera C. Rubin Observatory set to produce terabytes of data annually. Future space missions are also poised to obtain a wealth of ever more precise cosmological data, making cosmological breakthroughs increasingly likely.

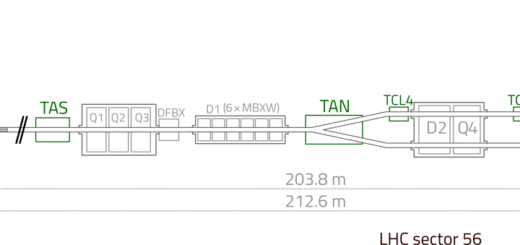

The Laser Interferometer Space Antenna (LISA) is an upcoming ESA space-based gravitational wave mission, scheduled for launch in 2035. LISA will search for gravitational waves from a wide range of astrophysical and cosmological sources, from dramatic events such as mergers of supermassive black holes across the universe to processes that took place billions of years ago, when the universe was less than a second old. In practice, LISA will measure a time series containing instrument noise and many overlapping gravitational wave signals, as well as possible data gaps and glitches. We expect LISA to take data for four years with a sampling interval of 5 seconds, resulting in tens of millions of data points. Analysing this data requires an enormous amount of computational resources.
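As a quick back-of-the-envelope check of these numbers, the Python snippet below counts the samples in a single uniformly sampled data stream. It is a sketch under simple assumptions: a Julian year of 365.25 days, no data gaps, and only one stream counted. The function name `n_samples` is purely illustrative. A 5-second interval corresponds to a sampling frequency of 0.2 Hz.

```python
# Assumption: a Julian year (365.25 days) is used to convert "four years" to seconds.
SECONDS_PER_YEAR = 365.25 * 24 * 3600


def n_samples(duration_years: float, dt_seconds: float) -> int:
    """Number of samples in a gap-free, uniformly sampled time series (hypothetical helper)."""
    return round(duration_years * SECONDS_PER_YEAR / dt_seconds)


n = n_samples(duration_years=4, dt_seconds=5)
print(f"{n:,} samples per data stream")  # 25,246,080 samples per data stream
```

The result, roughly 25 million samples, applies to a single stream. Data gaps would reduce it slightly, and a longer mission would increase it proportionally.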

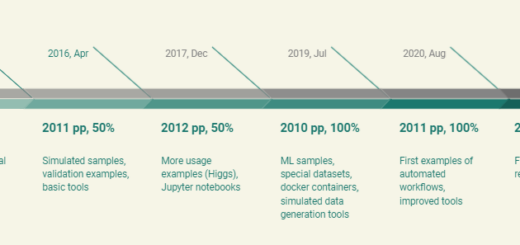

While this may seem like a problem for a decade from now, we need to start preparing already to make sure we are ready to take full advantage of this wealth of cosmological data. Indeed, the software that will be used to process and analyse LISA data is already under development. To properly test our analysis pipelines, we use mock data: simulated data that mimics what LISA might see, with increasing complexity in the number and type of signals. Producing and analysing these mock datasets also requires dedicated computational resources. These growing computational demands pose a challenge for the LISA mission, and indeed for all ongoing and future space missions.

To meet these demands, we make use of data centres spread across the world that are tasked with handling the computational needs of the LISA mission. The LISA data centres, collectively managed by the LISA Distributed Data Processing Centre, are a key component of the LISA ground segment (the ground-based infrastructure that supports the mission, including its data processing), which makes them indispensable for the success of the mission. Before launch in 2035, the plan is to have 12 data centres available for LISA. Currently only a few of these are up and running and providing computational resources, including one in Finland.

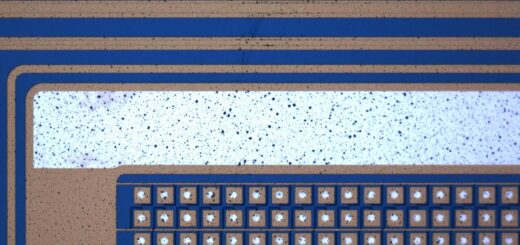

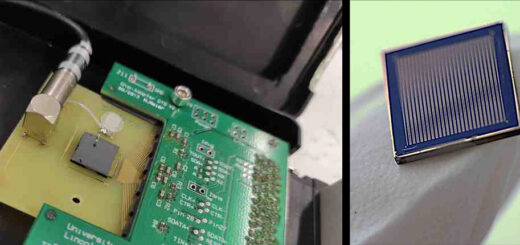

The Cosmology Data Centre Finland (CDC-FI), which was recently selected for the Roadmap of National Research Infrastructures 2025-28, acts as a data centre for both LISA and Euclid, providing Finland’s contribution to both missions. The CDC-FI consortium consists of the University of Helsinki, the University of Turku, the University of Oulu, Aalto University, the Helsinki Institute of Physics (HIP), and CSC – IT Center for Science. The hardware for CDC-FI is hosted by CSC at its Kajaani data centre, one of the most environmentally friendly data centres in the world. We rely on CSC’s expertise to manage the physical hardware, while the LISA and Euclid system administrators handle the interfaces with the dedicated mission software. Because this setup builds on CSC’s existing infrastructure, supplemented by a dedicated hardware acquisition for CDC-FI, it allowed CDC-FI to be deployed more quickly than some other LISA data centres.

CSC offers a flexible range of resources, including CPUs, GPUs, cloud services and storage. This in turn lets CDC-FI adapt the resources it contributes to LISA to the exact needs of the LISA Distributed Data Processing Centre. In the current stage of development and prototyping for LISA, the main tasks of the data centres revolve around software development, data production and analysis, and storage management. These tasks are shared between the data centres according to the resources each has available. Given both the resources available at CDC-FI and our readiness to provide them, we are currently mainly responsible for hosting the ongoing production of mock data.

As we do not yet have real LISA data, we rely on mock datasets that closely mimic what we expect LISA to see. We can inject specific gravitational wave signals into this mock data, with the goal of then analysing the data and recovering the injected signals. By injecting different signals, we can test our understanding of the underlying astrophysical and cosmological models, and confirm that we have the numerical tools needed to describe them. We can also assess our ability to process such large amounts of data efficiently and reproducibly. Finally, by recovering the injected signals, we can verify that we are able to extract information from the data, which is especially challenging when many signals overlap, as we expect in LISA.
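To make the inject-and-recover idea concrete, here is a deliberately simplified Python sketch. It assumes white Gaussian noise and a single monochromatic sinusoid as a stand-in for a gravitational wave signal. The function names `inject` and `recover_peak`, the short duration, and all amplitudes are hypothetical illustrative choices. Recovery uses a simple Fourier-spectrum peak search, not the waveform models and Bayesian inference that real LISA analyses rely on. Only the 5-second sampling interval is taken from the text.

```python
import numpy as np


def inject(noise, t, amplitude, freq):
    """Add a toy monochromatic 'signal' to the noise (hypothetical stand-in for a GW waveform)."""
    return noise + amplitude * np.sin(2 * np.pi * freq * t)


def recover_peak(data, dt):
    """Return (frequency, amplitude) of the strongest Fourier component, skipping the DC bin."""
    spectrum = np.fft.rfft(data)
    freqs = np.fft.rfftfreq(len(data), d=dt)
    k = np.argmax(np.abs(spectrum[1:])) + 1
    # For a sinusoid on an exact Fourier bin, |X[k]| = A * N / 2.
    amplitude = 2 * np.abs(spectrum[k]) / len(data)
    return freqs[k], amplitude


rng = np.random.default_rng(42)   # fixed seed so the toy run is reproducible
dt = 5.0                          # sampling interval in seconds, as quoted in the text
n = 2**16                         # about 3.8 days of data: a toy length, not a LISA requirement
t = np.arange(n) * dt
f_injected = 1000 / (n * dt)      # about 3.05 mHz, placed exactly on a Fourier bin
a_injected = 0.1                  # weak compared with the unit-variance noise per sample

noise = rng.normal(0.0, 1.0, n)
data = inject(noise, t, a_injected, f_injected)
f_rec, a_rec = recover_peak(data, dt)
print(f"injected f = {f_injected:.6e} Hz, recovered f = {f_rec:.6e} Hz")
print(f"injected A = {a_injected}, recovered A = {a_rec:.3f}")
```

With these settings the injected frequency lands on the recovered bin. The amplitude is recovered up to a noise scatter of order 0.005. Lowering `a_injected` until the peak disappears into the noise gives a feel for why many weak, overlapping signals make the real problem far harder.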

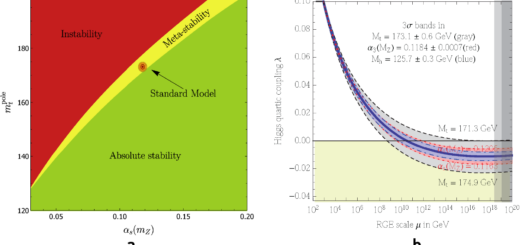

The production of mock datasets for LISA is not new: it was already done for the LISA Data Challenges, a series of blind challenges of increasing complexity designed to support the development, testing and deployment of the wide variety of data analysis techniques that will be applied to future LISA data. The next dataset, codenamed Mojito, will be produced and hosted on CDC-FI, and it will be the most ambitious data challenge yet: a two-year dataset containing many possible gravitational wave signals, which can be combined at various levels of complexity. One of the signals planned for inclusion in Mojito is a stochastic gravitational wave background produced by first-order phase transitions in the early universe, a topic on which the Computational Field Theory group in Helsinki has world-leading expertise. This helps ensure that our scientific interests are also well represented in the next LISA dataset.

The amount of cosmological data we expect to obtain in the coming years brings the tantalising prospect of cosmological breakthroughs, but also new challenges. To fully unlock the potential of this data, we need substantial computational resources, as well as experts in data analysis and cosmological software development. This will require extensive international collaboration, and CDC-FI ensures that Finland will play an important role in this exciting journey.

Deanna C. Hooper

University Researcher, Helsinki Institute of Physics, University of Helsinki