The new HIP dCache disk storage

Helsinki Institute of Physics (HIP) participates in the Large Hadron Collider (LHC) experiments ALICE, CMS and TOTEM. HIP collaborates with CSC – IT Center for Science on providing Worldwide LHC Computing Grid (WLCG) resources. The ALICE resources are part of the Nordic distributed Tier-1 resource NDGF, and the CMS resources form a CMS Tier-2 called T2_FI_HIP. The hardware and software of the HIP disk storage for ALICE and CMS were recently upgraded. For storing the data we use a middleware called dCache, which is developed by Deutsches Elektronen-Synchrotron (DESY) in Germany, the Fermi National Accelerator Laboratory (FNAL) in the US and the Nordic e-Infrastructure Collaboration (NeIC) in the Nordics. The dCache storage upgrade and its performance were presented in a poster at the Physics Days 2024 in Helsinki in March.

dCache is a grid middleware for storing and retrieving huge amounts of data, distributed among a large number of heterogeneous server nodes, under a single virtual filesystem tree with a variety of standard access protocols. Data on the HIP dCache is accessed through the WebDAV (HTTP) and XRootD protocols. WebDAV is an extension to the HTTP protocol that also allows data to be written. XRootD is a protocol developed for fast transfers of large data samples in High Energy Physics. CSC hosts and maintains the HIP ALICE and CMS dCache storage.

The new dCache system was funded by a Research Council of Finland FIRI grant. The tendering process was run by the University of Helsinki, and the total investment and operational costs were minimized by requiring a compact system. The new system is located in the CSC Kajaani data center and is operated remotely from Espoo by CSC personnel.

Ansible is a configuration management tool based on Python and SSH. The ALICE dCache installation was done simply by updating the NDGF Ansible scripts, installing the new disk pools, draining the old pools and migrating the data to the new pools. The CMS installation was done from scratch to get a clean new configuration, so it took more time.

The previous dCache system consisted of eight HPE Apollo 4510 G9 4U servers with a total raw size of 2176 TB located in Keilaniemi in Espoo. The old dCache has been moved to Kumpula campus for interactive CMS data analysis usage.

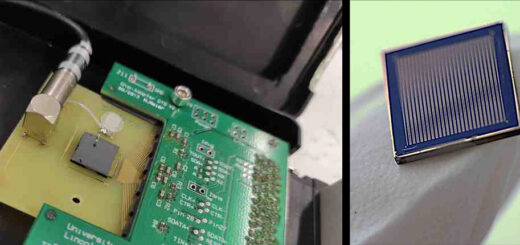

The head nodes of the new dCache system are two DELL PowerEdge R6515 servers hosting several virtual machines. The disk pools consist of thirteen DELL PowerEdge R740xd2 2U servers using RAID 6 and the XFS file system. The total raw size is 6 760 TB, which is an increase by more than a factor of 3 compared to the previous system. The Local Area Network (LAN) speed of the storage cluster increased from 40 Gb/s to 50 Gb/s (2 × 25 Gb/s). The Wide Area Network (WAN) speed increased from 40 Gb/s to 100 Gb/s. The new system is also more power efficient, as it uses 4.4-5.3 kW while the old system uses 6 kW.
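The comparison with the old system can be checked with a few lines of arithmetic. The sketch below uses only the figures quoted in the text; the "TB per kW" ratio is an illustrative metric introduced here, not one used by the authors, and it assumes that the quoted power figures cover comparable parts of the two systems.

```python
# Figures quoted in the article; the derived ratios are illustrative only.
OLD_RAW_TB, OLD_SERVERS, OLD_POWER_KW = 2176, 8, 6.0
NEW_RAW_TB, NEW_SERVERS = 6760, 13
NEW_POWER_KW_RANGE = (4.4, 5.3)  # quoted power range of the new system


def ratio(new, old):
    """Return how many times larger `new` is than `old`."""
    return new / old


def tb_per_kw(raw_tb, power_kw):
    """Raw capacity per kilowatt (an assumed, crude efficiency metric)."""
    return raw_tb / power_kw


capacity_factor = ratio(NEW_RAW_TB, OLD_RAW_TB)
old_tb_per_server = OLD_RAW_TB / OLD_SERVERS
new_tb_per_server = NEW_RAW_TB / NEW_SERVERS
old_density = tb_per_kw(OLD_RAW_TB, OLD_POWER_KW)
# The worst case uses the upper end of the quoted power range.
new_density_worst = tb_per_kw(NEW_RAW_TB, max(NEW_POWER_KW_RANGE))
new_density_best = tb_per_kw(NEW_RAW_TB, min(NEW_POWER_KW_RANGE))

print(f"capacity factor:   {capacity_factor:.2f}")
print(f"raw TB per server: {old_tb_per_server:.0f} -> {new_tb_per_server:.0f}")
print(f"raw TB per kW:     {old_density:.0f} -> "
      f"{new_density_worst:.0f} to {new_density_best:.0f}")
```

With these numbers the capacity factor comes out at about 3.1, consistent with the "more than a factor of 3" stated above.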

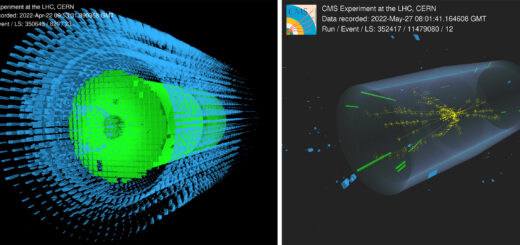

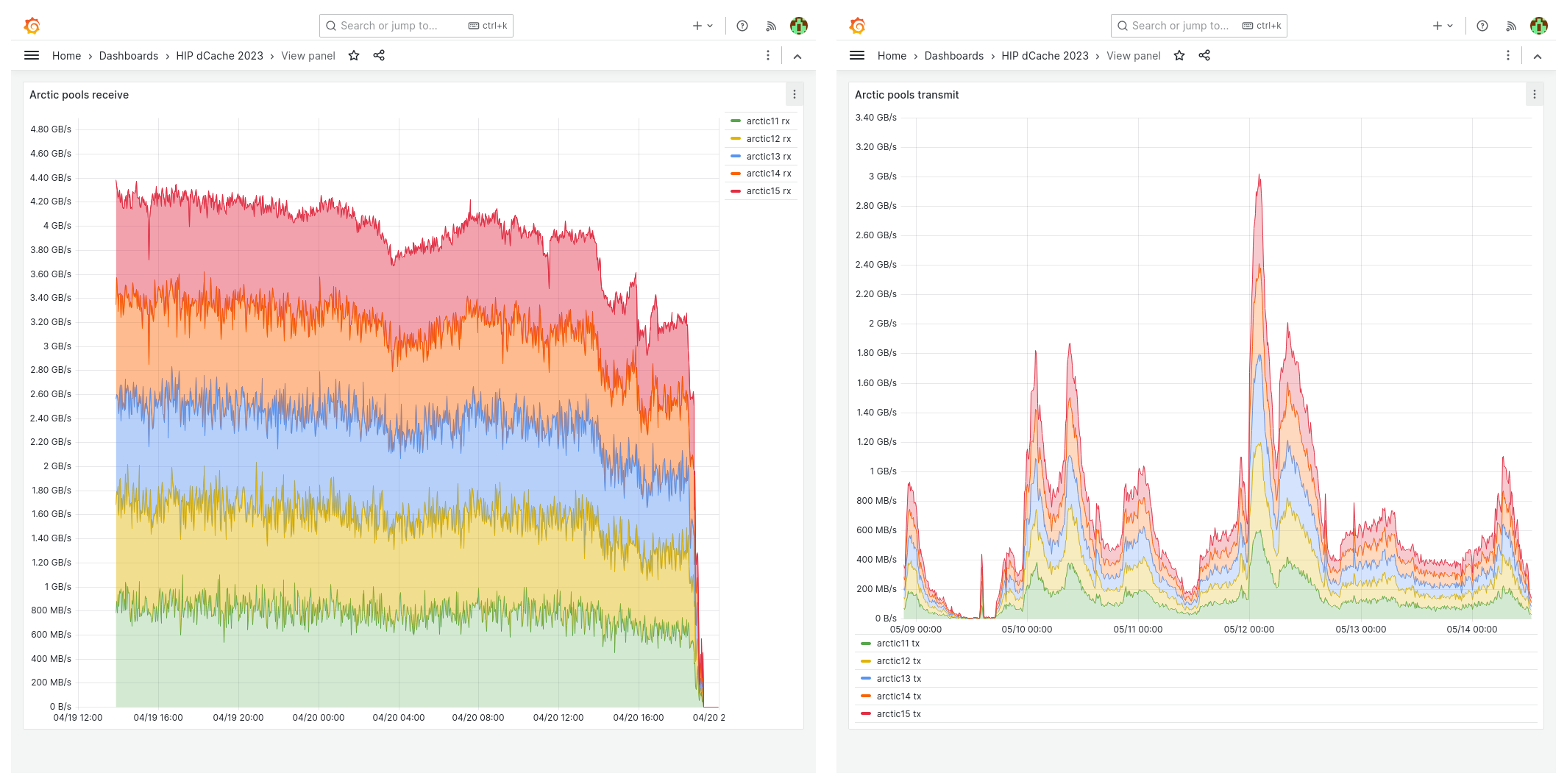

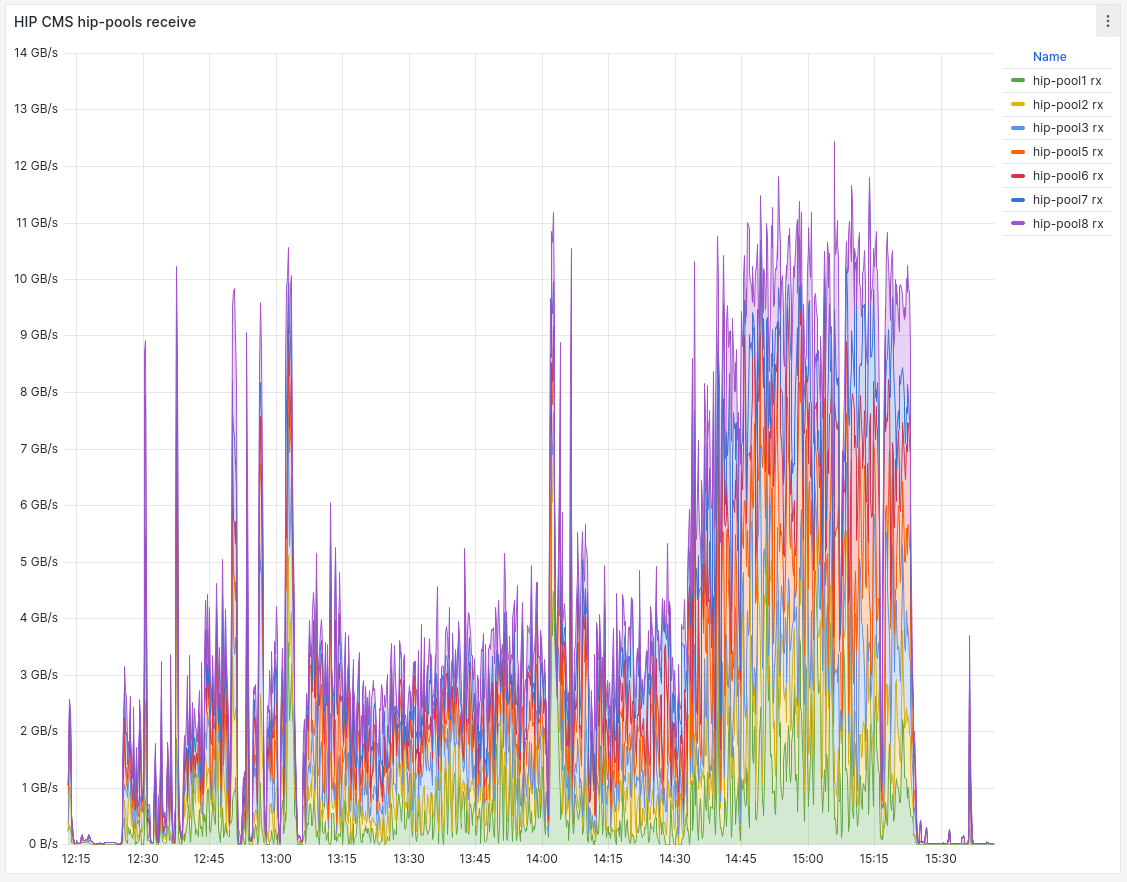

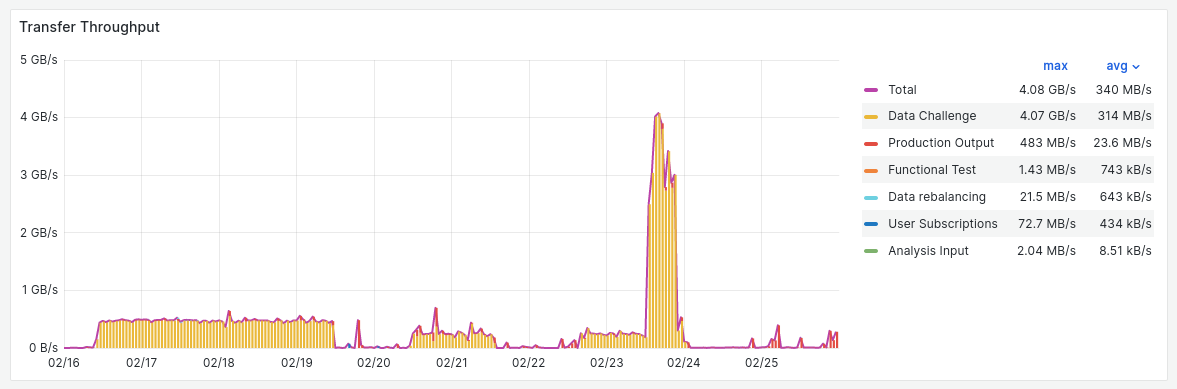

The virtual machine resources were tuned to optimize the performance, and the Linux TCP buffer sizes were increased to optimize the achievable transfer speeds. The performance of the new ALICE and CMS dCache systems turned out to be very good when they were taken into use; see Figures 1 and 2.
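Why larger TCP buffers help can be illustrated with the bandwidth-delay product (BDP): a single TCP stream can only fill a link if its buffer holds at least bandwidth × round-trip time worth of data. The sketch below is a generic estimate, not the tuning actually applied at CSC. The 40 ms round-trip time is a hypothetical value chosen for illustration, and the article does not say which kernel settings were changed (on Linux the usual ones are `net.core.rmem_max`, `net.core.wmem_max`, `net.ipv4.tcp_rmem` and `net.ipv4.tcp_wmem`).

```python
def bdp_bytes(bandwidth_gbit_s: int, rtt_ms: int) -> int:
    """Bandwidth-delay product in bytes, computed with integer arithmetic."""
    bits_per_s = bandwidth_gbit_s * 10**9
    # bits/s * ms / 1000 gives bits in flight; divide by 8 for bytes.
    return bits_per_s * rtt_ms // (8 * 1000)


ASSUMED_RTT_MS = 40  # hypothetical round-trip time, not from the article

wan_bdp = bdp_bytes(100, ASSUMED_RTT_MS)  # the 100 Gb/s WAN link
nic_bdp = bdp_bytes(25, ASSUMED_RTT_MS)   # one 25 Gb/s pool link

print(f"WAN link BDP:    {wan_bdp / 1e6:.0f} MB")
print(f"25 Gb/s link BDP: {nic_bdp / 1e6:.0f} MB")
```

Under these assumptions a single stream would need a buffer of the order of 100 MB to saturate one 25 Gb/s link, far above typical default limits, which is why raising the buffer sizes matters for long-distance transfers.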

[Figure captions, truncated in the source: "... 3 GB/s reading." and "... writing."]

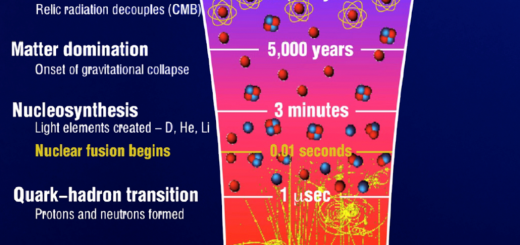

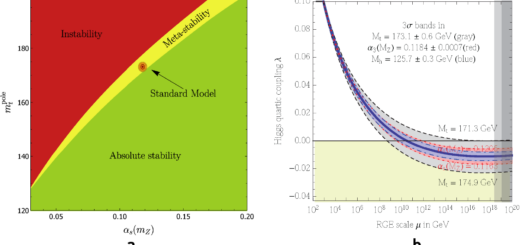

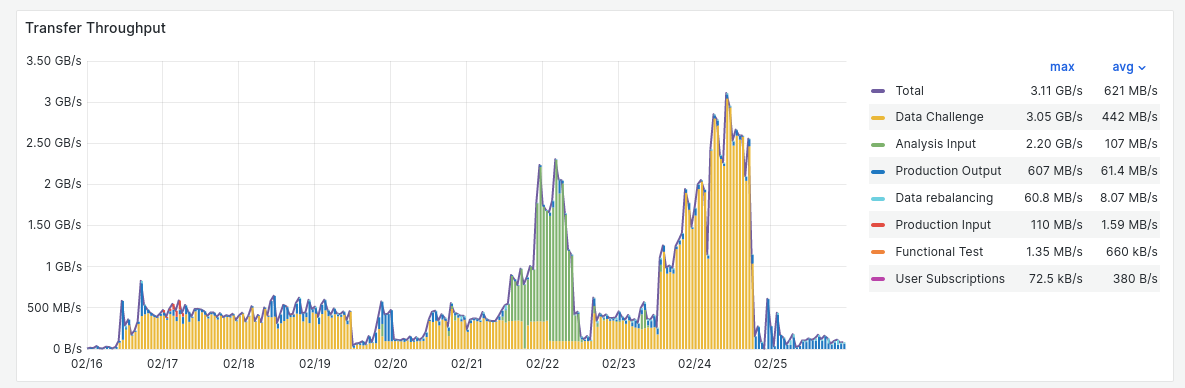

Every few years WLCG organises Data Challenges to make sure that the increasing LHC computing requirements can be met. The LHC will be upgraded to the High-Luminosity LHC (HL-LHC) for Run 4, which takes place during the years 2029-2032. The peak HL-LHC luminosity (roughly, the beam intensity) is expected to be 4 to more than 7 times the nominal LHC design luminosity. In February 2024 HIP participated in the Data Challenge 2024 (DC24), which was run simultaneously for all the big LHC experiments: ALICE, ATLAS, CMS and LHCb. The goal of DC24 was to demonstrate that data transfers at 25 % of the scale of the HL-LHC requirements can be reached and sustained on top of all normal data transfer operations.

In CMS the CERN Tier-0, the Tier-1 sites and the Tier-2 sites participated in DC24. For CMS a major goal was exceeding 1 Tb/s (125 GB/s) in total traffic, which was achieved.

T2_FI_HIP participated successfully in DC24 with the new dCache system. The planned targets of 400-500 MB/s were met and exceeded, with transfers peaking above 3 GB/s; see Figures 3 and 4.
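The rates above mix bits and bytes per second, so a short conversion helps put them side by side. The sketch below uses decimal units (1 GB = 1000 MB, 8 bits per byte) and only numbers quoted in the text; treating the peak as exactly 3 GB/s is a simplification, since the article says the transfers peaked above that value.

```python
def gbit_to_gbyte(gbit_per_s: float) -> float:
    """Convert Gb/s to GB/s (8 bits per byte)."""
    return gbit_per_s / 8


def mbyte_to_gbit(mbyte_per_s: float) -> float:
    """Convert MB/s to Gb/s using decimal units."""
    return mbyte_per_s * 8 / 1000


WAN_GBIT_S = 100  # WAN speed of the new system, from the article

cms_goal_gbyte_s = gbit_to_gbyte(1000)   # CMS goal of 1 Tb/s
target_low_gbit_s = mbyte_to_gbit(400)   # lower T2_FI_HIP target
target_high_gbit_s = mbyte_to_gbit(500)  # upper T2_FI_HIP target
peak_gbit_s = mbyte_to_gbit(3000)        # lower bound on the observed peak
peak_wan_share = peak_gbit_s / WAN_GBIT_S

print(f"CMS goal: {cms_goal_gbyte_s:.0f} GB/s")
print(f"T2_FI_HIP targets: {target_low_gbit_s:.1f}-{target_high_gbit_s:.1f} Gb/s")
print(f"Peak: at least {peak_gbit_s:.0f} Gb/s, "
      f"{peak_wan_share:.0%} of the WAN link")
```

Read this way, the DC24 targets correspond to a few Gb/s, and the observed peak used roughly a quarter of the 100 Gb/s WAN connection.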

The new ALICE and CMS dCache storage has increased capacity, network bandwidth and performance. The new dCache system has successfully met the DC24 CMS HL-LHC transfer targets. It will be used during LHC Run 3. In 2026 the next Data Challenge will be performed at the scale of 50 % of the HL-LHC data transfers, and the current HIP system is expected to perform well then too. For Run 4 with the HL-LHC a storage capacity upgrade will be needed.

Tomas Lindén

Project Leader, Tier-2 Operations

Helsinki Institute of Physics